The AI landscape is constantly evolving, and DeepSeek-R1 has been making waves recently. Rumour has it that this open-source model rivals OpenAI’s powerful yet proprietary o1 model in reasoning. I’ll refer to o1 as “01” in this blog, per the transcript’s convention.

Unlike OpenAI’s models, DeepSeek-R1 is truly open access. It’s free to use for fine-tuning or any other purpose. This matters for the AI industry because it puts advanced AI within easy reach. Over the past few days, I’ve put DeepSeek-R1 to the test on some real-world data science reasoning tasks I frequently encounter in my projects.

I want to share my initial observations, along with a detailed comparison against the industry-leading OpenAI-01 model.

First Impressions of DeepSeek-R1

I think DeepSeek-R1 is better at reasoning tasks than the standard ChatGPT models, especially GPT-4. It’s slower due to its “thinking” time, but its reasoning is good. The key question is whether it rivals or surpasses OpenAI’s o1 (01), one of the best models for complex reasoning. Could DeepSeek-R1 actually be a viable alternative to my $20 monthly OpenAI subscription? To find out, I pitted both models against each other on real-world, open-ended data science questions and compared their accuracy, clarity, and thoughtfulness side by side.

Why DeepSeek-R1 is So Intriguing

The excitement around DeepSeek-R1 comes from its unique training method. DeepSeek-R1 relies on reinforcement learning, whereas OpenAI’s models depend on costly, human-labeled data and supervised fine-tuning. This approach lets the model learn reasoning tasks naturally. Over time, it refines its thinking and experiences “aha” moments on its own. This achievement is remarkable, and it may offer a more sustainable path for AI development.

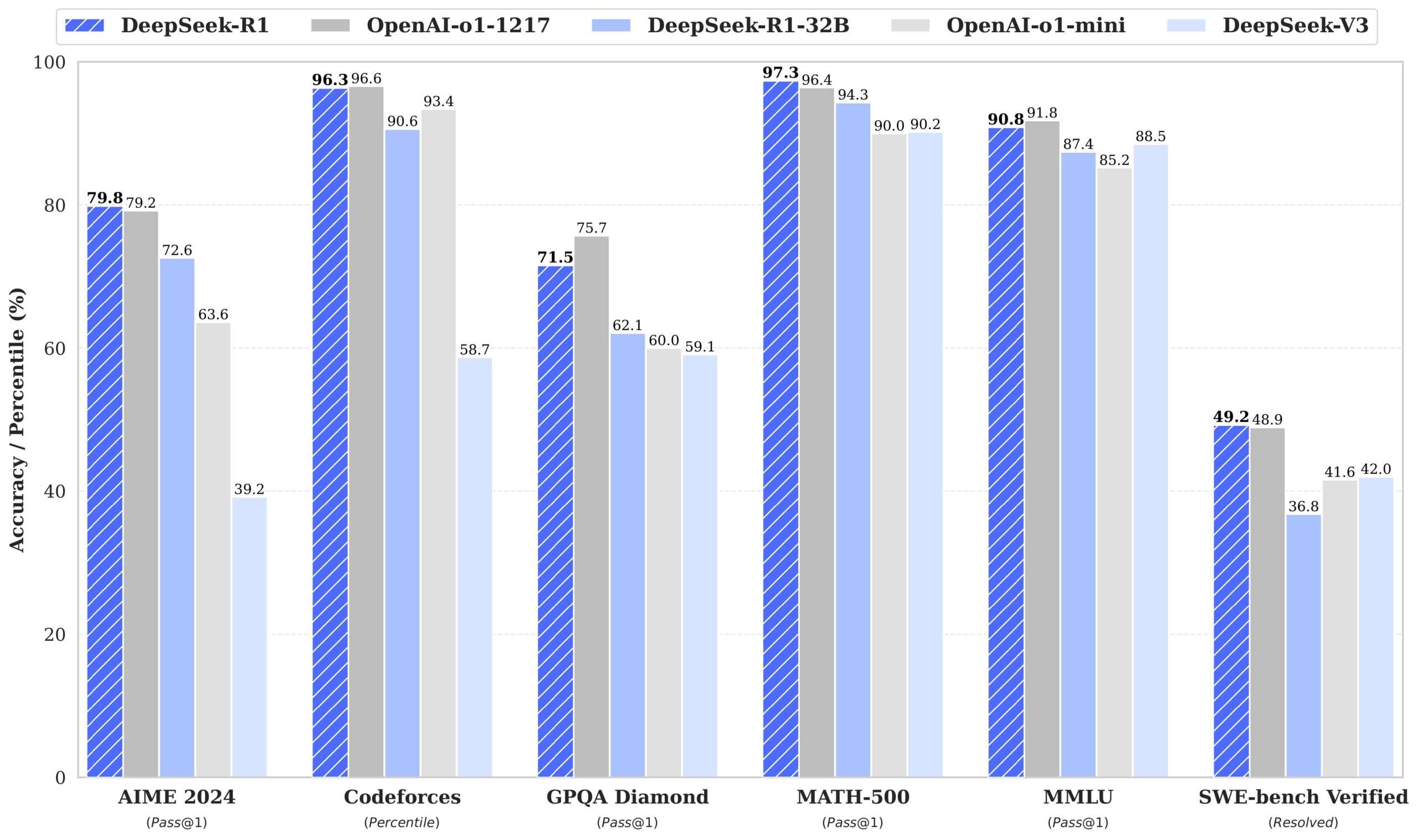

Benchmark results further fuel the intrigue. DeepSeek-R1 excels in mathematical reasoning, even slightly outperforming OpenAI-01 on certain benchmarks. It also matches OpenAI-01 in coding and software tasks, and it performs well on knowledge tests like MMLU and GPQA. Overall, DeepSeek-R1 is very impressive, with a slight edge in some areas.

Accessing and Using DeepSeek-R1

Getting started with DeepSeek-R1 is surprisingly easy. You can use the web interface at chat.deepseek.com. Simply create an account or log in with your Google account. In the chat interface, select the DeepSeek-R1 model. It’s better at reasoning than the default DeepSeek-V3.

To integrate DeepSeek-R1 into your apps or workflows, use the DeepSeek API. It’s a cheaper alternative to OpenAI’s API.
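Here is a minimal sketch of what an API call could look like, using only the Python standard library. It assumes the API accepts OpenAI-style chat completion requests; the endpoint URL and the model name `deepseek-reasoner` are my assumptions, so check them against DeepSeek's current API documentation before relying on them. The helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumptions: endpoint and model name are taken to follow DeepSeek's
# OpenAI-compatible API; verify both against the official docs.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first reply."""
    request = build_request(prompt, api_key)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("How should I handle missing values?", api_key)` with a real key sends the request. Keeping `build_request` separate makes it easy to inspect the payload without spending any credits.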

As a privacy-conscious user, I like that I can run DeepSeek-R1 locally using tools like llama.cpp. However, the full DeepSeek-R1 model has 671 billion parameters. It needs over 400GB of disk space and a lot of computing power. Someone online ran it on eight stacked Mac minis, but that’s not a practical setup for most.
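A quick back-of-the-envelope calculation shows where a figure like that comes from. This sketch only counts the raw weights and ignores file overhead and runtime memory, and the bit widths are illustrative assumptions rather than the exact quantization of any particular download.

```python
PARAMS = 671e9  # parameter count quoted above


def weights_size_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


# Illustrative precisions; real quantized files mix several bit widths.
for bits in (16, 8, 5, 4):
    print(f"{bits:>2} bits per weight: {weights_size_gb(PARAMS, bits):7.1f} GB")
```

At 16 bits per weight the model would need about 1.3TB, and even at 4 bits it is still around 335GB. A download of a little over 400GB is therefore consistent with a quantization somewhere between 4 and 5 bits per weight.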

DeepSeek also provides distilled models with fewer parameters, including versions with 7 billion and 14 billion parameters that run on more modest hardware. These distilled models are fine-tuned versions of other open models, such as Qwen and Llama, trained on datasets sampled from the DeepSeek-R1 model. They offer a good balance between performance and resource requirements.

I used llama.cpp to download and run the 14 billion parameter version on my laptop. You can run the model from the terminal, but I recommend Oobabooga’s text-generation-webui for a better interface. This offline AI platform supports llama.cpp and other local LLMs, which makes experimentation convenient and private. It automatically connects to your local models, providing a seamless experience.

Test Question #1: Data Cleaning and Preprocessing

My first test question focused on a common data science challenge:

Your dataset contains retail transactions. It has missing values, outliers, and inconsistent formats. Provide a systematic approach to clean and preprocess the data before any analysis.

I posed this question to both DeepSeek-R1 and OpenAI-01 simultaneously. DeepSeek-R1’s “thinking” process was fascinating to observe. It explained its steps. It looked at ways to deal with missing values and outliers before deciding. This felt very natural and almost like collaborating with a human data scientist.

While ChatGPT-01 responded quickly, its answer lacked the step-by-step clarity of DeepSeek-R1. However, the 01 model did provide a more complete framework. It included steps like understanding the business context. It also outlined next steps for analysis and modeling. DeepSeek-R1 omitted these.

Both answers were satisfactory, but ChatGPT-01 gave a more detailed response. Its wording was also more verbose. In contrast, DeepSeek-R1’s answer was concise and direct. I prefer the directness, but the extra context from ChatGPT-01 can be valuable.
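To make the question concrete, here is a minimal pandas sketch of the kind of pipeline both answers described. It is my own illustration, not the output of either model. The column names (`date`, `store`, `amount`), the toy data, and the choices of median imputation and a 1.5 × IQR outlier rule are all assumptions.

```python
import pandas as pd


def clean_transactions(df: pd.DataFrame) -> pd.DataFrame:
    """Clean a toy retail table with hypothetical columns: date, store, amount."""
    out = df.copy()

    # 1. Inconsistent formats: normalise text labels, parse dates and numbers.
    out["store"] = out["store"].str.strip().str.title()
    out["date"] = pd.to_datetime(out["date"], errors="coerce")
    out["amount"] = pd.to_numeric(out["amount"], errors="coerce")

    # 2. Missing values: drop rows without a usable date,
    #    then impute missing amounts with the median.
    out = out.dropna(subset=["date"])
    out["amount"] = out["amount"].fillna(out["amount"].median())

    # 3. Outliers: flag amounts outside 1.5 * IQR rather than deleting them.
    q1, q3 = out["amount"].quantile([0.25, 0.75])
    iqr = q3 - q1
    out["is_outlier"] = ~out["amount"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)

    return out.reset_index(drop=True)


# Made-up data with one bad date, one missing amount, and one extreme value.
raw = pd.DataFrame(
    {
        "date": ["2024-01-05", "2024-01-06", "not a date",
                 "2024-01-08", "2024-01-09", "2024-01-10"],
        "store": [" north", "NORTH", "south", "South ", "north", "south"],
        "amount": ["20.5", None, "18.0", "22.0", "5000", "19.5"],
    }
)
print(clean_transactions(raw))
```

Flagging outliers instead of dropping them keeps the decision reversible, which matters in retail data where a very large transaction can be perfectly legitimate.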

Test Question #2: Coding Challenge

Next, I tested their coding abilities with a straightforward prompt:

You have a customer risk dataset. Can you write Python code to visualize transaction amounts for customers by risk category? The graphs should be good-looking and have a consistent style.

DeepSeek-R1 expressed its thought process again. It considered data loading, visualization techniques, and handling outliers before generating the code. Unfortunately, there was a minor error in the code (a reference to pd.np), necessitating a manual fix. After correcting the import statement, the code successfully created a box plot showing the transaction amount by risk category, including median values and outliers.

ChatGPT-01 created error-free code. It produced two visualizations: a bar chart and a box plot. The bar chart showed the average transaction amount per risk category. The box plot showed the distribution. I preferred this approach. It clearly showed both the average and the distribution of the data. DeepSeek-R1 combined everything into one box plot. While this wasn’t incorrect, it was less insightful.

In this coding challenge, ChatGPT-01 had a slight edge. Its code was error-free and its visualizations were more insightful.
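For reference, here is a minimal sketch of the two-chart approach. I am not reproducing either model's actual code. The column names `risk_category` and `amount` and the synthetic data are assumptions, and the function name is hypothetical. NumPy is imported directly because, as far as I know, the `pd.np` alias was deprecated in pandas 1.0 and is gone in pandas 2.x.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np  # import numpy directly instead of going through pd.np
import pandas as pd


def plot_amounts_by_risk(df: pd.DataFrame):
    """Draw the mean amount (bar) and its distribution (box) per risk category."""
    categories = sorted(df["risk_category"].unique())
    groups = [
        df.loc[df["risk_category"] == c, "amount"].to_numpy() for c in categories
    ]

    fig, (ax_bar, ax_box) = plt.subplots(1, 2, figsize=(10, 4))

    ax_bar.bar(categories, [np.mean(g) for g in groups], color="steelblue")
    ax_bar.set_title("Average transaction amount")

    ax_box.boxplot(groups)
    ax_box.set_xticks(range(1, len(categories) + 1))
    ax_box.set_xticklabels(categories)
    ax_box.set_title("Distribution of transaction amounts")

    # Shared labels keep the two panels visually consistent.
    for ax in (ax_bar, ax_box):
        ax.set_xlabel("Risk category")
        ax.set_ylabel("Amount")
    fig.tight_layout()
    return fig


# Synthetic, skewed amounts purely for demonstration.
rng = np.random.default_rng(0)
demo = pd.DataFrame(
    {
        "risk_category": np.repeat(["high", "low", "medium"], 50),
        "amount": rng.lognormal(mean=4.0, sigma=0.6, size=150),
    }
)
fig = plot_amounts_by_risk(demo)
```

Calling `fig.savefig("amounts_by_risk.png")` writes the result to disk. Putting the bar chart next to the box plot shows the average and the spread at a glance, which is why I preferred the two-chart answer.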

Test Question #3: Detecting Subtle Data Misrepresentation

My final test involved a real-world example of data misrepresentation: a graph used by Purdue Pharma to downplay the addictive nature of OxyContin. The graph used a logarithmic scale, which smoothed out the fluctuations in blood plasma levels and created a misleading impression of the drug’s effects.

I presented the graph to both models with the question:

What’s wrong with this graph when interpreted as showing less fluctuations?

ChatGPT-01 correctly identified the use of a logarithmic scale. It explained how it compressed higher values. This made the difference between peaks and valleys appear smaller. I was genuinely impressed by its ability to spot this subtle manipulation.

DeepSeek-R1, unfortunately, missed the mark. It pointed out that the curve showed a steady decline without peaks and that the graph offered no comparison with other drugs. While these points were valid, they didn’t address the core issue of the logarithmic scale.

The test revealed a clear difference in how well the models analysed and understood visual information. ChatGPT-01 did an outstanding job. In contrast, DeepSeek-R1 had trouble with the nuances of the graph.
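A small calculation shows why the scale matters so much. This sketch measures how much of the plot height a peak-to-trough swing occupies on a linear axis versus a logarithmic one. The plasma levels and axis limits are hypothetical numbers I picked for illustration, not values from the actual graph.

```python
import math


def visual_swing(peak: float, trough: float,
                 axis_min: float, axis_max: float, log: bool) -> float:
    """Fraction of the axis height covered by the peak-to-trough swing."""
    if log:
        # On a log axis, positions are proportional to log10 of the value.
        peak, trough, axis_min, axis_max = (
            math.log10(v) for v in (peak, trough, axis_min, axis_max)
        )
    return (peak - trough) / (axis_max - axis_min)


# Hypothetical levels: a four-fold swing plotted on an axis from 1 to 100.
peak, trough = 80.0, 20.0
axis_min, axis_max = 1.0, 100.0

linear = visual_swing(peak, trough, axis_min, axis_max, log=False)
logarithmic = visual_swing(peak, trough, axis_min, axis_max, log=True)
print(f"linear axis: swing covers {linear:.0%} of the plot height")
print(f"log axis:    swing covers {logarithmic:.0%} of the plot height")
```

With these made-up numbers, the same four-fold swing fills roughly 61% of the plot on a linear axis but only about 30% on a logarithmic one, so the curve looks far flatter than the underlying data.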

Conclusions and Final Thoughts

After testing DeepSeek-R1 on these open-ended data science questions, I’m genuinely impressed. For many tasks, its reasoning abilities are on par with OpenAI-01. I particularly appreciate its step-by-step thinking process, which feels more collaborative and user-focused. This powerful, open-source model is freely available, and that is a big step toward democratizing AI. This development excites me. I do not fear AI taking over the world. What worries me is large tech companies monopolizing advanced AI.

However, ChatGPT-01 still has some advantages. It is better at coding tasks and nuanced visual analysis, as shown in the graph test. So, will I cancel my OpenAI subscription? Not just yet. But DeepSeek-R1 is a strong free alternative for data scientists and researchers who want a powerful, low-cost reasoning engine. It’s a game-changer for access to cutting-edge AI.

If you’re interested in exploring DeepSeek-R1, I highly recommend giving it a try. It’s a fantastic resource. Its open-source nature makes it a valuable tool for the AI community. The future of AI is bright. Open-source models like DeepSeek-R1 will shape it.
