Real-time data processing is more than a buzzword; for many organizations it is a necessity in a fast-paced, data-driven world. Organizations rely on real-time insights to stay competitive, make better decisions, and deliver more responsive customer experiences. But managing real-time data processing comes with challenges. This guide covers everything from the basics to effective strategies and tools.

1. What Is Real-Time Data Processing?

- Definition and Core Concepts:

Real-time data processing refers to the continuous input, processing, and output of data with minimal delay, often within milliseconds to seconds. Unlike batch processing, where data is collected over time and processed later, real-time processing handles each record or event as it arrives.

- Differences Between Real-Time and Batch Processing:

- Batch Processing: Processes large volumes of data at scheduled intervals.

- Real-Time Processing: Processes data as it arrives, offering immediate insights.

- Examples of Real-Time Applications:

- Monitoring stock prices in financial markets.

- Delivering personalized recommendations in e-commerce.

- Detecting fraud in banking transactions.
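The difference between the two models shows up in how results are computed. The minimal Python sketch below (hypothetical function and class names, no streaming framework involved) computes an average price once over a complete batch, and alternatively updates a running average as each price arrives, so a current value is available after every event.

```python
from typing import List


def batch_average(events: List[float]) -> float:
    """Batch style: wait until the whole collection is available, then compute once."""
    return sum(events) / len(events)


class StreamingAverage:
    """Streaming style: update the result incrementally as each event arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.total = 0.0

    def update(self, value: float) -> float:
        # Each arriving event updates the running state, so an up-to-date
        # result exists immediately, without waiting for the rest of the data.
        self.count += 1
        self.total += value
        return self.total / self.count


if __name__ == "__main__":
    prices = [101.0, 102.5, 99.5, 100.0]  # illustrative stock prices
    stream = StreamingAverage()
    for p in prices:
        print(f"price={p} running_avg={stream.update(p):.2f}")
    print(f"batch_avg={batch_average(prices):.2f}")
```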

2. Benefits of Real-Time Data Processing

- Faster Decision-Making:

Real-time data allows organizations to make decisions quickly, reducing response times and improving outcomes.

- Improved Operational Efficiency:

Automating data processing workflows minimizes manual intervention and streamlines operations.

- Enhanced Customer Experiences:

Personalized interactions based on real-time data increase customer satisfaction and retention.

- Increased Scalability:

With the right architecture, real-time systems can handle growing data volumes without compromising performance.

3. Key Challenges in Managing Real-Time Data Processing

- Handling High Data Velocity:

Managing the speed at which data flows through systems is critical. High-velocity data streams can overwhelm traditional systems.

- Ensuring Data Accuracy and Integrity:

Errors in real-time data can lead to flawed decisions. Validation and cleansing mechanisms are essential.

- Managing Resource Consumption and Costs:

Real-time systems often require significant computational and storage resources, driving up costs.

- Maintaining System Reliability:

Downtime or processing lag can disrupt operations, which is why real-time systems need to be robust and fault-tolerant.
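Validation at the point of ingestion is one common way to address the accuracy challenge. This sketch assumes a hypothetical transaction record with `account_id`, `amount`, and `currency` fields and an illustrative currency allow-list; records that fail the checks return `None` so the caller can drop them or route them elsewhere for inspection.

```python
from dataclasses import dataclass
from typing import Any, Dict, Optional

# Hypothetical business rule used only for this illustration.
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


@dataclass
class Transaction:
    account_id: str
    amount: float
    currency: str


def validate(raw: Dict[str, Any]) -> Optional[Transaction]:
    """Return a cleaned Transaction, or None if the record fails validation."""
    account_id = raw.get("account_id")
    amount = raw.get("amount")
    currency = raw.get("currency")
    if not isinstance(account_id, str) or not account_id.strip():
        return None
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, (int, float)) or amount <= 0:
        return None
    if not isinstance(currency, str) or currency.upper() not in ALLOWED_CURRENCIES:
        return None
    # Light cleansing: normalize whitespace and casing before downstream use.
    return Transaction(account_id.strip(), float(amount), currency.upper())


if __name__ == "__main__":
    records = [
        {"account_id": " A1 ", "amount": 25, "currency": "usd"},
        {"account_id": "A2", "amount": -5, "currency": "USD"},
        {"account_id": "", "amount": 10, "currency": "EUR"},
    ]
    valid = [t for t in (validate(r) for r in records) if t is not None]
    print(f"valid={valid} rejected={len(records) - len(valid)}")
```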

4. Tools and Technologies for Real-Time Data Processing

- Popular Platforms:

- Apache Kafka: A distributed event-streaming platform ideal for high-throughput use cases.

- Apache Flink: Offers low-latency, distributed stream processing.

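- Spark Streaming: Processes real-time data on Apache Spark's analytics engine as a series of small micro-batches (newer applications typically use Spark's Structured Streaming API).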

- Key Features to Look For in Tools:

- Scalability to handle growing data volumes.

- Support for distributed processing.

- Compatibility with various data sources and formats.

- Integrating Real-Time Tools into Existing Systems:

Smooth integration is key. Use APIs, connectors, and middleware solutions to connect real-time tools with legacy systems, so existing applications can publish and consume events without being rewritten.
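One common integration pattern is an adapter that translates a legacy system's output into events a streaming tool can consume. The sketch below assumes the legacy system exports CSV rows with hypothetical `sku`, `quantity`, and `updated_at` columns (naive timestamps in UTC) and converts each row into a JSON string payload; publishing that payload to a broker is left out.

```python
import csv
import io
import json
from datetime import datetime, timezone
from typing import Iterator


def legacy_csv_to_events(csv_text: str) -> Iterator[str]:
    """Translate rows exported by a hypothetical legacy system into JSON events.

    Assumed columns: sku, quantity, updated_at (ISO 8601, naive, UTC).
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    for row in reader:
        # Legacy timestamps are assumed to be naive UTC, so attach tzinfo explicitly.
        ts = datetime.fromisoformat(row["updated_at"]).replace(tzinfo=timezone.utc)
        event = {
            "type": "inventory_update",
            "sku": row["sku"],
            "quantity": int(row["quantity"]),
            "event_time": ts.isoformat(),
        }
        # A JSON string is a payload that a message broker client could publish.
        yield json.dumps(event, sort_keys=True)


if __name__ == "__main__":
    export = "sku,quantity,updated_at\nABC-1,5,2024-05-01T12:00:00\n"
    for payload in legacy_csv_to_events(export):
        print(payload)
```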

5. Strategies for Efficient Real-Time Data Management

- Prioritizing Critical Data Streams:

Not all data needs real-time processing. Focus on high-priority streams to optimize resources. - Implementing Robust Data Pipelines:

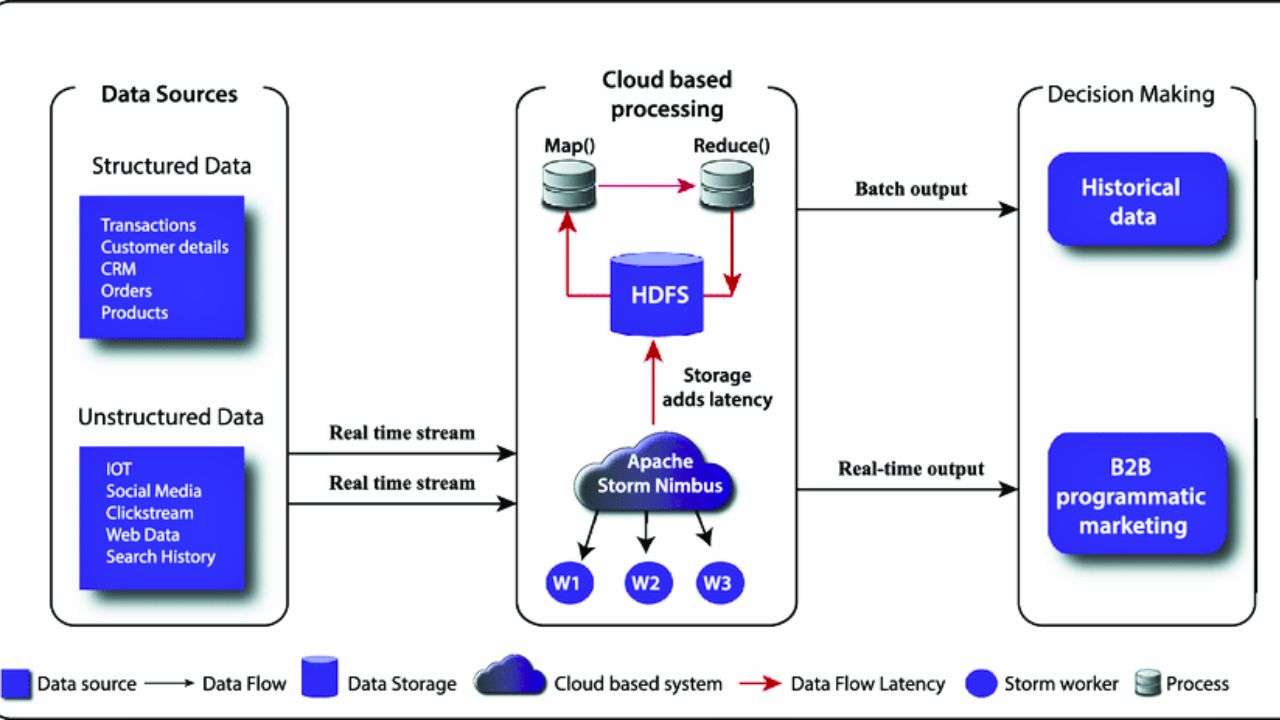

Design pipelines that ensure data flows seamlessly from source to processing systems and outputs. - Leveraging Cloud-Based Solutions:

Cloud platforms like AWS, Azure, and Google Cloud offer scalable and cost-effective real-time processing solutions. - Monitoring and Performance Optimization:

Use monitoring tools to identify bottlenecks and optimize system performance continuously.
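Prioritization can be as simple as routing by event type. In this sketch the set of critical types and the `PriorityRouter` class are hypothetical: critical events go to a handler immediately, while everything else is buffered and handed off in batches for cheaper, less time-sensitive processing.

```python
from typing import Callable, Dict, List

# Hypothetical set of event types treated as business-critical.
CRITICAL_TYPES = {"payment", "fraud_alert", "equipment_fault"}


class PriorityRouter:
    """Send critical events to an immediate handler; buffer the rest for batch jobs."""

    def __init__(self, realtime_handler: Callable[[Dict], None], batch_size: int = 100) -> None:
        self.realtime_handler = realtime_handler
        self.batch_size = batch_size
        self.batch_buffer: List[Dict] = []
        self.flushed_batches: List[List[Dict]] = []

    def route(self, event: Dict) -> None:
        if event.get("type") in CRITICAL_TYPES:
            self.realtime_handler(event)  # processed right away
        else:
            self.batch_buffer.append(event)  # deferred to batch processing
            if len(self.batch_buffer) >= self.batch_size:
                self.flush()

    def flush(self) -> None:
        """Hand the buffered events off as one batch (here: store them in a list)."""
        if self.batch_buffer:
            self.flushed_batches.append(self.batch_buffer)
            self.batch_buffer = []


if __name__ == "__main__":
    router = PriorityRouter(lambda e: print("realtime:", e), batch_size=2)
    for ev in [{"type": "payment"}, {"type": "page_view"}, {"type": "page_view"}]:
        router.route(ev)
    print("batches:", router.flushed_batches)
```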

6. Best Practices for Real-Time Data Processing

- Data Preprocessing Techniques:

- Filter irrelevant data to reduce processing loads.

- Aggregate data when individual events aren’t critical.

- Ensuring Data Security and Compliance:

- Encrypt data in transit and at rest.

- Comply with data protection regulations like GDPR and CCPA.

- Continuous Monitoring and Alert Systems:

- Implement dashboards to track system health.

- Set up alerts for anomalies or failures.

- Regular Testing and Updating Systems:

- Test real-time systems under load conditions.

- Regularly update software to incorporate the latest features and security patches.
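Filtering and aggregation are often combined in a tumbling window, a fixed-size, non-overlapping time interval. The sketch below assumes events are `(timestamp_seconds, sensor_id, reading)` tuples and that negative readings are irrelevant (an illustrative filter rule); it returns the average reading per sensor per window.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Event = Tuple[float, str, float]  # (timestamp_seconds, sensor_id, reading)


def tumbling_window_averages(
    events: Iterable[Event], window_seconds: float, min_valid: float = 0.0
) -> Dict[Tuple[int, str], float]:
    """Filter out irrelevant readings, then average the rest per sensor per window.

    The window index is floor(timestamp / window_seconds), so windows never overlap.
    """
    sums: Dict[Tuple[int, str], float] = defaultdict(float)
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts, sensor_id, reading in events:
        if reading < min_valid:  # filter: drop readings outside the assumed valid range
            continue
        key = (int(ts // window_seconds), sensor_id)
        sums[key] += reading
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


if __name__ == "__main__":
    readings = [(0.5, "s1", 10.0), (1.5, "s1", 20.0), (2.0, "s1", -1.0), (10.2, "s1", 30.0)]
    print(tumbling_window_averages(readings, window_seconds=10.0))
```

A streaming engine would emit each window once it is complete (Apache Flink, for example, uses watermarks to decide when that is); this sketch computes all windows over a finite input for clarity.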

7. Use Cases and Industry Examples

- Real-Time Fraud Detection in Finance:

Banks use real-time analytics to flag suspicious transactions, reducing fraud risk.

- Dynamic Pricing in E-Commerce:

Retailers adjust prices in real time based on demand, inventory levels, and competitor pricing.

- Predictive Maintenance in Manufacturing:

IoT sensors provide real-time data on equipment health, enabling proactive maintenance.

- Real-Time Analytics in Healthcare:

Healthcare providers monitor patient vitals in real time, enabling immediate intervention when necessary.
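As a simplified illustration of the fraud detection case, a velocity rule flags a card that is used too many times within a short window. The thresholds and the `VelocityRule` class are hypothetical, and events are assumed to arrive in timestamp order; real fraud systems combine many such signals, often with machine learning models.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityRule:
    """Flag a card with more than `max_txns` transactions within `window_s` seconds."""

    def __init__(self, max_txns: int = 3, window_s: float = 60.0) -> None:
        self.max_txns = max_txns
        self.window_s = window_s
        # Per-card timestamps of recent transactions (a sliding window).
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, card_id: str, ts: float) -> bool:
        q = self.recent[card_id]
        q.append(ts)
        # Evict timestamps that have slid out of the window (events assumed in time order).
        while q and q[0] <= ts - self.window_s:
            q.popleft()
        return len(q) > self.max_txns


if __name__ == "__main__":
    rule = VelocityRule(max_txns=2, window_s=10.0)
    for t in [0.0, 1.0, 2.0, 15.0]:
        print(t, "suspicious" if rule.check("card-1", t) else "ok")
```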

8. Future Trends in Real-Time Data Processing

- Growth of AI-Driven Data Processing:

AI algorithms can help analyze real-time data streams, for example by detecting anomalies or classifying events as they arrive.

- Edge Computing and IoT Integration:

Processing data closer to the source reduces latency and the volume of data sent over the network.

- Advances in Machine Learning for Real-Time Applications:

Machine learning models are increasingly deployed to provide predictive insights in real time.

How to Get Started with Real-Time Data Processing

Implementing real-time data processing may seem daunting, but breaking it into manageable steps makes the transition smoother. Here's a step-by-step guide to help you navigate the process effectively:

1. Understand Your Needs and Goals

Start by identifying why you need real-time data processing and what you aim to achieve. Clearly define your use case to avoid unnecessary complexity.

- Key Questions to Ask:

- What business problems can real-time data processing solve?

- Which processes need immediate insights?

- What outcomes are you expecting (e.g., faster decisions, improved customer experience)?

- Example Use Cases:

- Monitoring customer interactions on your website to deliver real-time recommendations.

- Detecting equipment failures before they happen using sensor data in manufacturing.

Pro Tip: Prioritize use cases where the ROI of real-time processing is highest.

2. Evaluate and Choose the Right Tools and Platforms

Choosing the right tools is critical to success. Look for platforms and software that align with your needs.

- Key Considerations:

- Scalability: Can the tool handle increasing data volumes?

- Integration: Does it integrate seamlessly with your existing systems?

- Latency: What is the time lag between data input and processing?

- Cost: Does the tool fit within your budget?

- Popular Tools:

- Apache Kafka: Ideal for event-driven data processing.

- Spark Streaming: Great for analytics and big data processing.

- Amazon Kinesis: A cloud-based option for real-time streaming.

- Google Cloud Dataflow: Managed stream and batch data processing.

Pro Tip: Test multiple tools using small datasets before committing to one.
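When testing tools on small datasets, it helps to measure latency and throughput the same way for each candidate. This harness is a sketch: it times any Python callable per event, so each candidate would be wrapped in a hypothetical function that calls the tool under test. In-process timing does not capture network or broker latency, so end-to-end measurements are still needed.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(process: Callable[[dict], object], events: List[dict]) -> Dict[str, float]:
    """Measure per-event latency and overall throughput for one candidate handler."""
    if not events:
        raise ValueError("events must be non-empty")
    latencies_ms: List[float] = []
    start = time.perf_counter()
    for event in events:
        t0 = time.perf_counter()
        process(event)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    elapsed = time.perf_counter() - start
    return {
        "events_per_sec": len(events) / elapsed if elapsed > 0 else float("inf"),
        "median_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
    }


if __name__ == "__main__":
    sample = [{"value": i} for i in range(10_000)]
    # Hypothetical candidates; in practice each would wrap a call into a real tool.
    candidates = {"double": lambda e: e["value"] * 2, "stringify": lambda e: str(e)}
    for name, fn in candidates.items():
        print(name, benchmark(fn, sample))
```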

3. Define Your Data Sources and Pipelines

Identify the sources of your data and design pipelines to ensure smooth data flow.

- Examples of Data Sources:

- IoT sensors and devices.

- Social media feeds.

- Transactional data from e-commerce platforms.

- Server logs and system events.

- Building a Data Pipeline:

- Ingestion: Collect data from various sources using connectors or APIs.

- Processing: Use tools like Apache Flink to process data in real time.

- Output: Send processed data to dashboards, databases, or other systems.

Pro Tip: Use message brokers like RabbitMQ or Kafka to decouple data producers from consumers and buffer the flow of data between them.
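The ingestion, processing, and output stages can be sketched in plain Python. Here an in-process bounded `queue.Queue` stands in for a message broker such as Kafka or RabbitMQ; the record fields (`price`, `qty`) and the enrichment step are hypothetical. Because the queue has a maximum size, ingestion blocks when the consumer falls behind, a simple form of backpressure.

```python
import json
import queue
import threading
from typing import Dict, Iterable, List

_END = object()  # sentinel marking the end of the stream in this sketch


def ingest(raw_lines: Iterable[str], broker: queue.Queue) -> None:
    """Ingestion: parse raw records and publish them to the broker."""
    for line in raw_lines:
        broker.put(json.loads(line))  # blocks while the bounded queue is full
    broker.put(_END)


def process_and_output(broker: queue.Queue, sink: List[Dict]) -> None:
    """Processing and output: enrich each event, then write it to a sink."""
    while True:
        event = broker.get()
        if event is _END:
            break
        event["total"] = event["price"] * event["qty"]  # hypothetical enrichment
        sink.append(event)  # stands in for a dashboard, database, or other system


def run_pipeline(raw_lines: List[str]) -> List[Dict]:
    broker: queue.Queue = queue.Queue(maxsize=10)  # stands in for Kafka/RabbitMQ
    sink: List[Dict] = []
    consumer = threading.Thread(target=process_and_output, args=(broker, sink))
    consumer.start()
    ingest(raw_lines, broker)
    consumer.join()
    return sink


if __name__ == "__main__":
    print(run_pipeline(['{"price": 2.5, "qty": 4}', '{"price": 1.0, "qty": 3}']))
```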

4. Start Small with a Pilot Project

Don’t try to implement real-time processing across your organization at once. Begin with a pilot project targeting a specific use case.

- Why Start Small?

- Easier to manage and troubleshoot issues.

- Provides measurable results to justify further investment.

- Example Pilot Project:

- If you’re in retail, start with real-time inventory updates to improve stock accuracy.

Pro Tip: Use KPIs (Key Performance Indicators) to measure the success of your pilot project. Examples include data latency, system uptime, and cost efficiency.
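Latency KPIs are usually reported as percentiles rather than averages, because a few slow events can matter more than the typical one. The sketch below computes a nearest-rank percentile and an uptime percentage; the function names are illustrative and the latency samples in the test are made up, not measured.

```python
import math
from typing import List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def uptime_percent(total_seconds: float, downtime_seconds: float) -> float:
    """Share of the measurement period during which the system was available."""
    return 100.0 * (total_seconds - downtime_seconds) / total_seconds


if __name__ == "__main__":
    latencies_ms = [12, 15, 11, 40, 13, 14, 12, 16, 13, 90]
    print("p50:", percentile(latencies_ms, 50), "p95:", percentile(latencies_ms, 95))
    print("uptime:", round(uptime_percent(30 * 24 * 3600, 1296), 3))
```

With these sample values (milliseconds), the median is 13 ms while the 95th percentile is 90 ms, the kind of tail behavior an average tends to hide.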

5. Implement Robust Monitoring and Alerting Systems

Once your pilot project is live, continuous monitoring is essential.

- What to Monitor:

- Data flow: Ensure smooth ingestion and processing.

- Latency: Monitor the time between data arrival and processing.

- Errors: Track system failures or missed events.

- Tools for Monitoring:

- Prometheus: Open-source monitoring and alerting toolkit.

- Grafana: Visualizes real-time data metrics.

- AWS CloudWatch: Monitors resources and applications on AWS.

Pro Tip: Set up automated alerts for anomalies to address issues proactively.
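One simple way to detect anomalies in a metric stream, independent of any particular monitoring tool, is a rolling z-score. This sketch (hypothetical class and parameters) returns an alert message when a new value lies more than `threshold` standard deviations from the mean of the recent window, and `None` otherwise.

```python
import math
from collections import deque
from typing import Deque, Optional


class RollingZScoreAlert:
    """Alert when a value deviates strongly from the rolling mean of recent values."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_samples: int = 10) -> None:
        self.values: Deque[float] = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> Optional[str]:
        alert = None
        # Only judge new values once there is enough history to estimate a baseline.
        if len(self.values) >= self.min_samples:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0 and abs(value - mean) / std > self.threshold:
                alert = f"anomaly: value={value:.1f} mean={mean:.1f} std={std:.1f}"
        self.values.append(value)
        return alert


if __name__ == "__main__":
    monitor = RollingZScoreAlert(window=20, threshold=3.0, min_samples=5)
    for latency in [100, 102, 98, 101, 99, 100, 103, 97, 250]:
        msg = monitor.observe(latency)
        if msg:
            print(msg)
```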

6. Focus on Data Security and Compliance

Real-time processing often involves sensitive data. Prioritize security and compliance to protect your business and users.

- Key Security Practices:

- Encrypt data at rest and in transit.

- Use standard authentication and authorization protocols such as OAuth 2.0 and OpenID Connect.

- Limit access to sensitive data based on roles.

- Compliance Considerations:

- GDPR (General Data Protection Regulation) for personal data of individuals in the EU, including when it is processed by businesses outside the EU.

- CCPA (California Consumer Privacy Act) for personal information of California residents.

- HIPAA (Health Insurance Portability and Accountability Act) for protected health information in the United States.

Pro Tip: Conduct regular security audits to identify and mitigate vulnerabilities.
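Role-based access can also be applied to the events themselves, so each consumer sees only the fields its role permits. The role names, field names, and mapping below are hypothetical; unknown roles receive no fields (deny by default).

```python
from typing import Dict, Set

# Hypothetical role-to-field policy; a real system would load this from managed config.
ROLE_FIELDS: Dict[str, Set[str]] = {
    "analyst": {"event_id", "timestamp", "amount"},
    "support": {"event_id", "timestamp", "email"},
    "admin": {"event_id", "timestamp", "amount", "email", "card_number"},
}


def view_for_role(event: Dict[str, object], role: str) -> Dict[str, object]:
    """Return only the fields the role may see; unknown roles get an empty view."""
    allowed = ROLE_FIELDS.get(role, set())
    return {k: v for k, v in event.items() if k in allowed}


if __name__ == "__main__":
    event = {
        "event_id": 1,
        "timestamp": 1700000000,
        "amount": 42.0,
        "email": "a@example.com",
        "card_number": "4111111111111111",
    }
    for role in ("analyst", "support", "unknown"):
        print(role, view_for_role(event, role))
```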

7. Scale Gradually

Once your pilot project succeeds, gradually expand your real-time data processing capabilities.

- Steps for Scaling:

- Add more data sources to your pipeline.

- Optimize infrastructure to handle increased loads.

- Train your team on advanced features of the tools you’re using.

- Challenges During Scaling:

- Ensuring system reliability as data volumes increase.

- Balancing cost efficiency with performance.

Pro Tip: Leverage cloud solutions like AWS, Azure, or Google Cloud to scale resources dynamically based on demand.
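Streaming platforms such as Kafka scale consumption by splitting a stream into partitions and assigning records to partitions by key, so events for the same key stay in order on one partition while different partitions are processed in parallel. The sketch below shows the idea with a stable hash and hypothetical function names; it is not Kafka's actual partitioner.

```python
import zlib
from collections import Counter
from typing import Dict, Iterable


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index using a stable hash.

    zlib.crc32 is used instead of the built-in hash(), which is randomized per
    process for strings and would send the same key to different partitions
    after a restart.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_load(keys: Iterable[str], num_partitions: int) -> Dict[int, int]:
    """Count how many keys land on each partition (useful for spotting skew)."""
    return dict(Counter(partition_for(k, num_partitions) for k in keys))


if __name__ == "__main__":
    customers = [f"customer-{i}" for i in range(1000)]
    print(partition_load(customers, 4))
```

With modulo hashing, changing the number of partitions remaps most keys, which is one reason partition counts are usually planned ahead of growth.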

8. Continuously Optimize and Innovate

Real-time data processing is not a one-time setup; it requires ongoing improvements.

- Optimization Strategies:

- Regularly review and refine data pipelines to eliminate bottlenecks.

- Experiment with new tools and technologies.

- Update algorithms to improve data analysis accuracy.

- Staying Ahead with Innovation:

- Explore emerging trends like edge computing for faster data processing.

- Integrate AI and machine learning for predictive analytics.

Pro Tip: Encourage cross-departmental collaboration to find innovative use cases for real-time data.

Conclusion

Real-time data processing has become a cornerstone of modern business operations. From fraud detection to predictive maintenance, its applications are vast and impactful. By implementing the strategies and tools outlined in this guide, you can unlock the full potential of real-time data processing, drive growth, and stay ahead in 2024. Ready to embrace the power of real-time data? Start small, think big, and let the data flow.